Drugs currently in clinical trials will likely not be impacted by AI

6.4k words, 30 minutes reading time

Foreword: Just a reminder: this is an 'Argument' post. All of them are intended to have a reasonably strong opinion, with mildly more conviction than my actual opinion. Think of it closer to a persuasive essay than a review on the topic, which my Primers are more-so meant for. Do Your Own Research applies for all my posts, but especially so with these.

Also, I’m extremely appreciative to everyone I spoke to for this essay, but especially grateful to Bioengineering Bro, Alex Telford, and Frederick Peakman.

Introduction

I’ve been thinking a lot about timing lately. The drug development lifecycle is quite unlike many industries; the vast majority of the initial experimentation gets to happen in a comparatively brief 4-7 year period (preclinical), and then one must endure an, on average, 10.5 year period of waiting around to see if the experimentation actually works (clinical trials). During this time, there are no changes to the base chemical allowed, only dosages, patient population, and trial structure. If the drug works, the company stands to reap billions. If it doesn’t, it often loses an equivalent amount.

Very few other sectors in the world have this particular type of risk profile.

What impact does this particular phenomenon have on the types of businesses that work and the types that do not work for the pharma world? In this essay, I put forward the thesis that AI startups that hope to sell to drug development companies, whether that is services or products, will be unlikely to be useful to any therapeutic currently in the clinical stage. To be of any use at all, they must focus their efforts on preclinical work, with the hope that it translates to decisions important at the clinical stage. And that this is a direct result of how extremely high stakes the drug development process is.

To be clear, this isn’t actually that controversial of an argument. I can’t really think of any biotech startup today that is actively going against the argument I’m making here. So, to some degree, this thesis is obvious, but it was helpful for me to write out, so it may be helpful to read.

First, I will start with a fictional story about clinical trial interpretation (section 2), say that an AI-optimist ending to that story is unlikely (section 3), work through the common arguments against my point (section 4), and then end that fiction with what I believe the true conclusion would be (section 5). Finally, I will offer what I think is the strongest argument against the thesis of this article (section 6). As an addendum, I’ll cover one of the biggest phase 3 drug failures to have ever occurred, and check whether an agentic literature-review platform (FutureHouse’s) could’ve detected it (section 7).

I’ve been (endearingly, I hope) told my essays can have a strange, wandering structure. One early reader of this essay said this:

I read it first and I was like eh 6-7/10, not sure what you're trying to say. But after reading it again I like it a lot, 9/10.

Take from that what you will. It may be worth revisiting sections if things initially feel unclear.

Let’s start!

A story

Let’s say you work near the top of a biotech startup. Times have been good to you — you’ve helped launch several drugs at past roles in big pharma, a feat that few people in the world can match, maybe even one of them becoming a blockbuster. As befitting an individual of your talents, you’ve joined a startup and now have been offered a chance to decide upon what is likely the most singularly important choice that exists in any pharmaceutical company.

You get to choose which drug gets the green light.

What does ‘green light’ mean here? For our purposes, it doesn’t matter, it can be anything; pushing the drug to phase 3 trials, repurposing a shelved asset, whatever. As with all green lights found in a pharmaceutical company, the important part for our story is that whatever decision you make will cause the gargantuan wheels of your organization to slowly shift to accommodate it, thousands of man-hours and millions of dollars being spent to grease the wheels of your choice. So, it’s quite important for you, your employees, and an uncountable number of potential patients, that you get this decision right.

To make it concrete, let’s consider the following decision: whether you should push a Phase 2 asset forwards to Phase 3. The asset in question is publicly called AEJ-2399, a peptide-based therapeutic meant to treat a rare inflammatory condition.

As far as anybody can tell, the data is strong: statistically significant efficacy and a mostly-clean safety profile. From the many hundred-page market analyses thrown your way over the last few months, the economics seem great as well — a favorable reimbursement landscape and a many-fold return on the hundreds of millions in investment on the chemical.

Really, it’s an easy decision. You announce the decision to move forwards. You expect nods of approval, and nothing more. Then you get an urgent call from your head of clinical development who claims to have discovered a strange trend in the data.

“What kind of trend?” you ask.

A sigh from the other end of the line. “An imbalance in major adverse events in a specific subgroup. It didn’t show up in the primary analysis, but when we stratified by a baseline biomarker level…”

“Which biomarker?”

“HGF. Patients with high baseline hepatocyte growth factor had a nearly threefold increase in serious adverse events.”

You sit back and think. HGF wasn’t originally a focus of the program, but now that you think about it, you recall an old preclinical report suggesting the drug might have off-target interactions in pathways associated with fibrosis and vascular remodeling. But there are always off-target effects for every drug, you can’t be expected to consider every one of those reports.

“Okay. Okay. What’s the specific recommendation here? Exclude high-HGF patients from phase 3?”

“Well, the issue is that this trial wasn’t powered for subgroup analyses. If we were paranoid, we’d exclude patients. If we were optimistic, we wouldn’t. Given the data we have, it’s a bit of a coin flip.”

A headache ripples through your skull.

A day later, you’re in a boardroom with ten senior leaders, staring at a slide deck. The first few slides recap the safety signal, then come slides from research explaining why it’s probably just noise, then a slide from regulatory explaining why the FDA won’t see it as noise, then a slide from commercial showing how sales projections implode if you lose the broad label, then a slide from finance showing how much money has already been sunk into this program.

Then the real discussion starts.

The researchers argue that “the biological mechanism doesn’t support an HGF-mediated toxicity, so the signal is probably spurious, the old preclinical report has a hazy relationship to the current result”. The regulatory team argues that “while post-hoc analyses shouldn’t be over-interpreted, ignoring a threefold increase in adverse events is the kind of thing that lands companies in lawsuits” and that “statistical significance isn’t the FDA’s only criterion; if the agency thinks there’s a safety risk, they will kill this drug”. The commercial team argues that the potential value is “still too high to walk away without more data”. The finance team reminds everyone how much money is on the line.

You ask the obvious question, since it seems like nobody else will. “Okay. Okay. Um. Can’t we just directly test out HGF-mediated toxicity cheaply in some way?”

Research looks more exhausted than you’ve ever seen them. “Well, we could run a targeted preclinical study. Maybe take some liver and vascular cell models, expose them to AEJ-2399, and measure any HGF-related pathway activation. Would take a few weeks, maybe a month. There isn’t a good animal model for this stuff, so in-vitro is all we have, and who knows if the results would actually be useful.”

A member from regulatory frowns. “That might help with mechanism, but it won’t tell us whether this is a real clinical safety risk. The FDA won’t care about mechanistic speculation when they have actual adverse event data in patients.”

A clinical development head from research leans forward. “We could reanalyze stored patient samples from the Phase 2 study and see if HGF levels correlate with other biomarkers of risk. If we see a consistent pattern, like, say, increased fibrosis markers in the high-HGF subgroup, that would support a real effect. Could be done in a few weeks, depending on sample availability.” A toxicity head from research cuts in, “That won’t work, the stored samples weren’t preserved for fibrosis marker analysis. They were processed for pharmacokinetics, not histopathology. The results would not at all be trustworthy.”

“Or,” the commercial lead cuts in, “we could just run a small, targeted Phase 2b study, stratified for HGF, before going all-in on Phase 3.”

The chief finance officer doesn’t even look up from his phone. “We don’t have budgeted runway for another Phase 2b. If we delay Phase 3, we lose our fast-track designation. Which means another two years before approval, which means there’s a pretty good chance we’ll need to raise another round at awful terms. Or simply go bankrupt.”

Everyone stares back at you.

And then you have to make the call. You look around the room. Everyone has an opinion. No one agrees. And you realize that this entire process is insane. You have a billion-dollar decision to make, and you are making it based on who argues most convincingly in a PowerPoint presentation.

You schedule another meeting for tomorrow. Maybe things will be clearer then. If not then, maybe another meeting will help. You’ll get to the bottom of this eventually.

A mistake on my end

At this point in the story, if you are a regular reader of this blog, you may instinctively think AI is useful here. It must be! AI will pull all the biology/commercial/financial/etc. threads together into one piece and present a nice picture for the poor, addled executive to think over. Not even molecular models! Simple natural-language models would be sufficient for this task.

I thought this too! There are lots of pieces about the preclinical utility of AI, much less about what happens after that. So naturally I assumed all sorts of weird, strange, and scientifically interesting challenges arose when interpreting or conducting a clinical trial, just as they arose during preclinical work, and that advances in ML would dramatically alter how efficiently and accurately things could move.

And so I wrote an entire essay arguing for the potential of AI in clinical-stage drugs. It was ~7,400 words! At the end of that first story, I envisioned a world where an agentic model sat alongside the executives during these green light meetings and could say stuff like this:

The model replies instantly. “You mentioned that the tissues aren’t preserved for histopathology,” it begins, “but, from looking at the original trial SOPs, several of the stored serum samples were processed under cold-chain protocols compatible with multiplex immunoassays, if you’d be okay running those. At least 38 patients have viable serum aliquots available, 12 of which have the high-HGF phenotype.”

It pauses, then suggests: “You could run an immunoassay panel. Select a few vascular biomarkers, VCAM-1, ANGPT2, ELAM-1, ET-1, along with a few inflammation controls like IL-6 and TNF-α. These are all literature-validated indicators of endothelial activation, angiogenic imbalance, and vascular stress. Total cost per sample would be under $300, and most vendors can return results in 7 to 10 business days. Since the samples are retrospective and anonymized, the IRB burden would also be minimal. I’d estimate the projected total cost to be just shy of $14,000. I just sent an email to eight CROs you’ve used in the past to confirm these details and cc’d everybody in this room.”

As research begins to ask what the purpose of the assay is, the model loads up its next set of tokens: “If elevated levels of these markers are specifically enriched in high-HGF patients who experienced the adverse events, it would support the hypothesis that AEJ-2399 worsens something in a subgroup. It wouldn’t be definitive, but it avoids the concerns you have with in-vitro and animal model tests.”

And the scientists would marvel in awe at something that could surpass themselves in understanding the full scope of the problem and suggest intelligent ways for them to de-risk hiccups in the clinical stage development process.

But at the end of it, it felt off. It gave me alarm bells. I’ve never even touched clinical-stage stuff, all my work has been preclinical, so I had no mental scaffolding for how the drug development process goes on after me. So, I worried that I had unconsciously applied a preclinical framework to something that was not preclinical.

This wasn’t a new situation to me; I often write about areas for which I have zero hard-won intuition. But in those situations, I almost always have people I can rely upon to help correct mistakes in my judgement, researchers and investors I can reach out to. In this case, I didn’t really feel like anybody fit the bill.

So, I publicly requested folks to contact me:

In total, I talked with five people, trying to learn more about the clinical trial lifecycle: two people at a life-sciences consulting firm, one person who works in pharma quality assurance, one scientist at a big pharma, and one person who runs an AI-agents-for-pharma startup.

They all said basically the same thing: AI probably won’t be super helpful at the clinical stage.1

Why? Let’s get into it.

A Socratic seminar on why AI at the clinical stage isn’t helpful

The AI tool should be able to suggest follow-up analyses for the phase 2 asset you described earlier, right?

Sure, but would anybody care?

The first story I had mapped out earlier included a lot of intrigue, mystery, and scientific inquiry. But that’s not how it works in the real world. What I learned from talking to people in this space was that by the time a drug exits preclinical development, the shape of its development pathway is mostly fixed. This isn’t because of perfect foresight, but because there are too many institutional constraints for things to truly change course on the fly. Budget, headcount, protocol designs, statistical analysis plans, regulatory timelines, and so on. And if there are hiccups to the process, executives usually rely on one thing to decide their plans: finances.

Is this asset still worth spending money on? If it is, the next steps are usually obvious and don’t really need AI. Really, many clinical trial interpretations are mostly just a set of safety and efficacy criteria. If it isn’t worth spending money on or it doesn’t meet what you have in your checklist, you immediately move on to the near-infinite number of promising-looking things from your preclinical pipeline.

I think you’re being too pessimistic. There are cases of patient exclusions being added or dosages being refined in phase 3, based on results from phase 2, which directly disproves you. Why wouldn’t an AI agent help you arrive at those decisions faster?

I think it’s easy to get bogged down a little here. Let’s take a step back.

The drug development process has an extraordinarily helpful mental model: portfolio optimization. At the very start of the pipeline, you have N assets. You have the option to spend $Y on each asset, each one having its own probability T of working. And, of course, you hope to sell every asset for its own sum of $Z. Thus, we have the very simple expectation equation:

$$\mathbb{E}[\text{value}] = T \cdot Z - Y$$

Or, more realistically at a big pharma, across N assets:

$$\mathbb{E}[\text{portfolio value}] = \sum_{i=1}^{N} \left( T_i \cdot Z_i - Y_i \right)$$

This frames the entire endeavor as a capital allocation problem under uncertainty. So, in order for the AI to be fundamentally useful, it must do one or more of the following: improve T (chance of success), lower $Y (cost per asset), and better estimate $Z (total market).

So, let’s say that the AI assistant really is capable of helping clinical-stage drugs, mostly by increasing T, the chance of success. Whether that is in suggesting better patient stratifications or better dosages or so on, it doesn’t really matter. This will also cause one of three other things to happen:

Increase $Y, because whatever follow-ups the AI suggests will cost money to validate.

Reduce the size of $Z, by suggesting the exclusion of certain patient populations.

Make $Z disappear entirely by suggesting that the asset should be discarded.

This is all to say that, even if the model is giving useful ideas, it primarily acts as a cost center and functions via negative selection by highlighting risks or advocating caution, as opposed to positive intervention. This isn't inherently a bad thing! Avoiding costly failures can obviously be as financially valuable as pushing modest successes. But it is worth reframing AI at this stage as not a proactive, generative force, which is its typical role in the preclinical lifecycle, but rather something much closer to risk management.
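To make the trade-off concrete, here is a minimal sketch that reads the expectation as T times $Z minus $Y for a single asset. That reading, the `Asset` class, and every number below are assumptions made purely for illustration; none of the figures come from a real program.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Asset:
    name: str
    p_success: float  # T: probability the asset works
    cost: float       # $Y: remaining spend, in $M
    payoff: float     # $Z: value if it works, in $M


def expected_value(asset: Asset) -> float:
    """Expected value of one asset, read as T * Z - Y."""
    return asset.p_success * asset.payoff - asset.cost


# Hypothetical numbers, chosen only to illustrate the trade-off.
baseline = Asset("broad label", p_success=0.50, cost=200.0, payoff=1000.0)

# The advice raises T, but validating it raises $Y
# and the suggested patient exclusion shrinks $Z.
with_exclusion = replace(
    baseline,
    name="high-HGF patients excluded",
    p_success=0.60,
    cost=220.0,
    payoff=700.0,
)

for asset in (baseline, with_exclusion):
    print(f"{asset.name}: EV = {expected_value(asset):.0f} $M")
```

With these made-up inputs, the chance of success goes up while the expected value goes down, which is the uncomfortable shape of most clinical-stage advice. Different inputs can of course flip the result.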

And, unfortunately, reducing risk at the clinical stage almost necessarily means you’re opting into a higher false positive rate. Which is really, really uncomfortable for everyone involved, given the massive amounts of money potentially being left on the table at the clinical stage. I mentioned earlier that clinical trial interpretation is rote. Why is it rote? Because the stakes are so high and over such long timeframes that nobody wants to entertain ambiguity. Rote execution becomes a defense mechanism. You stick to the protocol, follow the SAP, report what was pre-specified, and keep deviation to a minimum. Not because it’s always the best science, but because any deviation invites delay, risk, or regulatory scrutiny.

To note: I don’t want to give the impression that I’m caricaturing pharma executives in any way. I think the pharmaceutical industry is amazing. Basically every person I’ve ever met who has worked at the clinical stages of drug development is deeply kind, cares a lot about science, and really, really wants to help patients. One need not look further than Alnylam Therapeutics as a prototypical example of researchers tirelessly working for years to prove out an unproven and often-disparaged modality of drug.

So when I refer to those involved in the drug approval process as caring about money, I am not referring to them seeking to personally profit at the cost of patient lives. What I am referring to is them having systemic pressures and tough choices to make in a high-risk, high-cost environment, and money being an extremely good tool by which they can create something useful. Science matters, efficacy matters (usually…), safety matters, and patient lives matter to those in charge. But the primary way by which society writ large allows people to care about those things over long periods of time is by aligning them with incentives. And in our current system, those incentives are largely financial.

So, someone in charge of developing a drug would ideally like to keep things standard.

Realistically speaking, how costly would any trial deviation be?

Great question! Let’s go back up to the suggestion that the AI had: running a set of immunoassays to check whether high-HGF patients did express more vascular inflammation biomarkers.

Let’s ignore the actual cost of running that experiment, since the cost would be the same regardless of whether it’d be done in preclinical settings or not. And let’s also say that the results came back, and that high-HGF patients did indeed show worse biomarkers, which implies that the adverse effect issue is maybe real.

Now, if you decide to still move forwards with the phase 3 with high-HGF patients excluded, you can’t just do that. You’d need to formally amend the trial protocol, revise the statistical analysis plan, and notify every regulatory agency overseeing the trial. That triggers a cascade. Updated investigator brochures, IRB re-approvals, new consent forms at every site, retraining for clinical staff, possibly renegotiated contracts with CROs. Depending on the scale of your trial, this might cost anywhere from $150K to $300K and delay your timeline by 3 to 6 months, at minimum.
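As a rough back-of-the-envelope sketch of why the delay tends to hurt more than the amendment fee itself, the snippet below combines the ranges quoted above with a monthly burn rate. The burn rate and the `deviation_cost` helper are hypothetical, introduced only for illustration.

```python
def deviation_cost(amendment_cost: float, delay_months: float, monthly_burn: float) -> float:
    """Direct amendment cost plus the burn incurred while the trial waits."""
    return amendment_cost + delay_months * monthly_burn


# Ranges quoted in the text above.
AMENDMENT_COST_USD = (150_000, 300_000)
DELAY_MONTHS = (3, 6)

# Hypothetical assumption: a small biotech burning $2M per month while it waits.
MONTHLY_BURN_USD = 2_000_000

low = deviation_cost(AMENDMENT_COST_USD[0], DELAY_MONTHS[0], MONTHLY_BURN_USD)
high = deviation_cost(AMENDMENT_COST_USD[1], DELAY_MONTHS[1], MONTHLY_BURN_USD)
print(f"Estimated cost of the deviation: ${low:,.0f} to ${high:,.0f}")
```

Under that assumed burn rate, the paperwork is a small fraction of the total; most of the cost is simply the months spent not running the trial.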

But, you may say, didn’t the story say that the phase 3 trial wouldn’t go forwards if we had to exclude patients? Absolutely! And let’s say you decide to just drop the drug entirely because of the results. Well, wait a minute. A Phase 2b study that ended with statistically significant efficacy and only a flagged safety signal in a subgroup? This would raise red flags with investors, trigger uncomfortable questions at board meetings, and potentially violate prior commitments made during financing rounds. Remember, you’ve already spent tens, maybe hundreds, of millions getting this far. You don’t just throw it away unless the risk is clear and unresolvable. And if you do, you’d better be able to point to more than a few vascular biomarker deltas in a subset of 38 retrospective samples. So perhaps you end up running the trial with high-HGF patients excluded anyway.

The way you’ve set up the situation is such that no risk management tools could ever be sold to pharma. Which is almost certainly false.

Well, no, what I’m saying is that the risk management tool shouldn’t come at the clinical stage. It should instead come at the preclinical stage.

Brandon White, the founder of a predictive toxicology startup, has written what is likely one of the best ‘how to sell to pharma’ essays I’ve ever read. In it, he writes this: “The decisions that create the most value are choosing the right target/phenotype, reducing toxicity, and predicting drug response + choosing the right target population”.

This is correct! But there is some nuance here.

Though those decisions are the most value-generative in the abstract, the windows for actually making them are surprisingly narrow and almost entirely in the preclinical stage. Obvious for target selection! But it is worth restating for the other two. Even if the decisions your risk management tool hopes to impact are at the clinical stage, the tool must intervene in the preclinical stage.

In general, I think it’s good to remember this: nobody in that pharma executive meeting room wants another idea on how to interpret a trial’s result. Least of all one that arrives this late in the game, is expensive to validate, may hurt the economics of the asset, and hinges on a speculative mechanistic thread that can’t be resolved within the operational and regulatory box they’re already trapped inside. There’s a lot of hypothesizing and theorizing and experimenting at the preclinical stage, but clinical stages are almost always about trying to endure what is least painful.

And anything that AI brings at this stage will be fairly painful, even if the idea is helpful and rational to follow.

So pharma executives shouldn’t be modeled as rational agents?

Pharma execs should be modeled as rational agents! But remember the equation from before? Their objective function isn’t exactly ‘how do we improve the chance of success for this drug?’. It’s more “How do we maximize the expected value of the portfolio, given irreversible commitments, sunk costs, fixed timelines, risk thresholds, and organizational constraints?”. So, improving the chance of success for a single drug might actually decrease overall portfolio value, if doing so requires expanding the budget, delaying other programs, or decreasing morale (both in investors and employees).
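The portfolio-level point can be sketched with a toy model: a fixed budget, a handful of programs, and a brute-force search for the affordable set with the highest total expected value (read here as T times $Z minus $Y per program). The program names, the budget, and all numbers are hypothetical.

```python
from itertools import combinations

# (name, T, $Y in $M, $Z in $M); all values are hypothetical.
PROGRAMS = [
    ("lead asset", 0.50, 200.0, 1000.0),
    ("backup A", 0.20, 60.0, 800.0),
    ("backup B", 0.15, 40.0, 600.0),
]
BUDGET = 300.0  # $M available across the whole portfolio


def ev(program):
    """Expected value of one program: T * Z - Y."""
    _, t, y, z = program
    return t * z - y


def best_portfolio(programs, budget):
    """Brute-force the affordable subset of programs with the highest total EV."""
    best, best_value = (), 0.0
    for size in range(1, len(programs) + 1):
        for subset in combinations(programs, size):
            cost = sum(p[2] for p in subset)
            value = sum(ev(p) for p in subset)
            if cost <= budget and value > best_value:
                best, best_value = subset, value
    return [p[0] for p in best], best_value


# De-risking the lead asset: T rises from 0.50 to 0.58, but $Y rises by $50M.
derisked = [("lead asset", 0.58, 250.0, 1000.0)] + PROGRAMS[1:]

print(best_portfolio(PROGRAMS, BUDGET))
print(best_portfolio(derisked, BUDGET))
```

In this toy setup the lead asset's own expected value improves after de-risking, yet the extra spend pushes one backup program out of the budget and the portfolio total falls. It is a sketch of the mechanism, not an estimate of how often this happens in practice.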

For what it’s worth, the last point is surprisingly important. There are at least some drug trials that have gone on for no other reason than ‘we need to boost confidence’. While that drug is off failing to do anything, the hope is that the temporary boost in confidence would allow the scrambling together of something that has more promise. Irrational in the short term, but hopefully rational in the long term.

Again: I am not trying to disparage executives, who typically have the best of intentions. Their decision-making is almost necessarily going to be complicated + messy, and not as straightforward as one may naively expect from the outside looking in.

I know that you are bullish on companies like weave.bio (automation for filing for clinical-stage assets) and convoke.bio (agentic software infrastructure for information gathering, documentation, and knowledge management at preclinical and clinical stages). How do you square this thesis with your optimism there?

Good question! I’ll offer some nuance.

I think the core difference here is that those companies are not trying to uncover fundamentally new information, you know? They are accelerating the gathering of pre-existing information into a coherent picture, with no promises on being able to deliver anything more than that. If we return to the equation, they are not concerned with increasing T; they are really arguing that they can reduce $Y by reducing man-hours spent on the necessary IND paperwork and market analysis and the like. And that’s far, far easier for people to be comfortable working alongside at the clinical stage, because advice given there can be easily validated and easily acted upon.

But if you are claiming to offer new information that is difficult to validate, you can’t come in as late in the drug-cycle game as those other companies have the luxury of doing.

So, to summarize, if I have some tool that claims to improve T, I should mainly focus on applying it/selling it to people working at the preclinical stage? And never the clinical stage, because advice there is really hard to act upon?

Yes! Really, this goes beyond AI itself. This advice can be generally applied to anything that aims to serve pharma needs.

I think you are wrong.

Well, maybe. We’ll discuss the counterarguments to all this in the next-to-next section. First, let’s go back to how the story would’ve ended in reality.

How the story would actually go in practice

Rewinding the story, back to the HGF stratification bit and the ensuing meeting.

The safety signal is flagged. A few emails go around. Someone sets up a meeting with the project leads. They file into the meeting room, review the results, and say, “Well. Yeah. This sucks.” Finance clears their throat and says, “Our analysis assumes a broad market label; the already small patient population isn’t worth serving if we lose that. I’m unsure if it’d be worth allocating further capital to this.”

Everybody is mildly annoyed, but sagely nods after finance pulls up a slide of the company’s current runway.

Out of a desire to be thorough, you schedule a Type C meeting with the FDA to get their feedback. In the meeting you ask, very politely, whether the FDA would refuse a broad market label if you conducted a phase 3 with patient exclusion. They smile. “Any safety signal observed in a clinical trial should be thoroughly investigated prior to initiation of a pivotal study,” the agency’s medical officer says, reading directly from their notes. You try to ask follow-ups. They smile again: “We evaluate the totality of evidence.”

You return to the office.

Since your startup is a biotech startup with few assets, you worriedly invite your investors onto a call. They’ve seen this happen dozens of times in the past, and start discussing options. If you are unlucky, there will be no next funding round; now the hope is to simply recoup costs. Expensive R&D work will be immediately halted, acquisitions will be explored, and layoffs will start. You don’t take this personally. Drugs fail all the time, and investors cannot be expected to pour tens of millions of dollars into an asset that is increasingly high-risk.

If you are lucky, the investors stick by you and tell you to go full steam ahead with the broad market phase 3. Why? So you can hedge against negative results by aggressively raising and working on the other promising things you have before the trial is over. And who knows? Maybe the drug worked fine after all and the HGF-biomarker thing was an artifact. Stranger things have happened.

And that’s it. There is relatively little room for o5/Gemini Pro Max V2/Claude 4.1 to come into the picture and offer their take on the situation. Because scientific insight was never really the bottleneck at the clinical stage. Resources were.

But…

A steelman: what if this is all a cultural problem?

Generally, I think strong stances are useful to understand, but not useful to hold. So do I personally really, truly believe that AI won’t be able to really assist much with clinical stage assets? A little bit, but I can also see the opposite happening.

Some context: currently, nobody in these clinical-stage meetings has fantasies of actually understanding what a drug is doing inside of a body. It is a common fun fact that nobody quite grasps how therapeutics like, e.g., SSRIs actually work, but that’s a universality amongst many active drug candidates. Those in pharma often know some basic fundamental facts about their compounds; that it is probably exploiting this-or-this target, that it is roughly this soluble, that it doesn’t have overly toxic chemical moieties, that it had so-and-so LD50 in mice. But anything past that is just too complicated to fully grasp. Whatever the ‘true’ effect of the molecule is, it is incidentally learned from adverse effects during clinical trials.

I want to note that this is not a slight meant against anybody in those meetings! All of whom are typically extremely intelligent individuals. But that’s just the reality of how deeply complicated drugs are and how few resources there are to deeply explore their effects.

This is partially why people are loath to hear any expensive ideas after the preclinical stage. Yes, it is hard to act on them, but perhaps more importantly, nobody actually trusts those takes or gives them much significant weight in their decision making. But is that an artifact of how limited humans are, and the biases we’ve created as a result of our inability to really understand in-vivo biology? Maybe there genuinely are useful signals that only a (pseudo) superintelligence could’ve observed from the data collected, something that would save any pharma company willing to listen billions of dollars.

Perhaps AI will really be important for clinical stage assets, but there will be a cultural shift required for people to accommodate the useful, but painful advice it has.

For the first time ever, there is a nearly infinitely scalable source of intelligence that any biotech can repeatedly poke to gain more insight on their go/no-go asset decision. It makes some sense that the relative value of this information is iffy given how high stakes the situation is, but it cannot possibly be zero. After all, we have a proof point for painful ideation being useful at the clinical stage: biotech consultants, who are often called upon during clinical stages. Their job is to say the uncomfortable thing in a slide-deck-friendly way, and then vanish into the ether while internal teams decide whether to take it seriously.

It may just be that AI-based guidance hasn’t inserted itself into the clinical-stage pharma workflow yet in quite the same way. But perhaps one day it will. Once upon a time, no one trusted CROs with trial design. Then they did. Once upon a time, no one used EDC systems to collect clinical data. Then they did. The future comes, even if in the moment it seems implausible. AI as a guiding force in interpreting clinical data may very well go the same route.

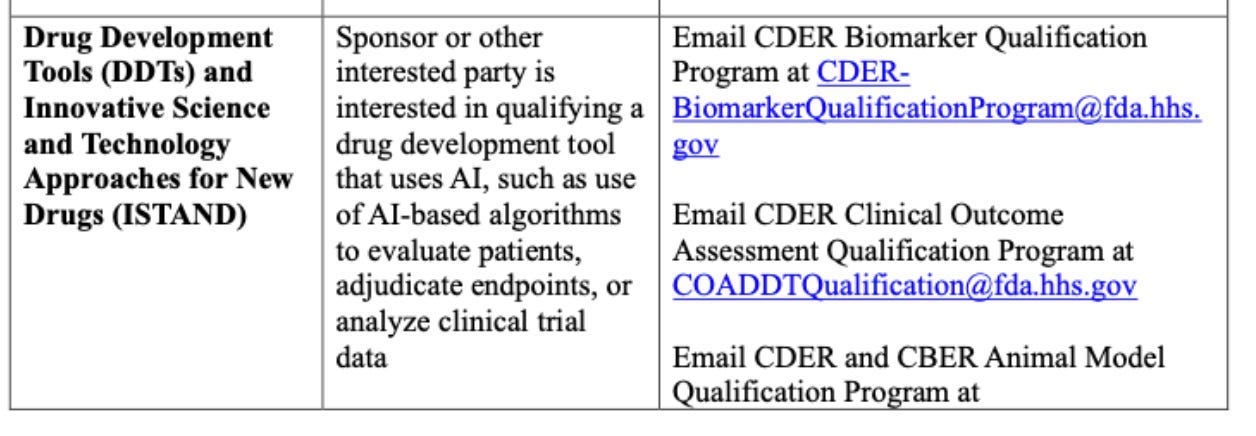

This perhaps will be especially the case as the FDA ramps up how much it plans to rely on AI in the coming years. Some more specific guidance on projects is given here, with this particular one being a reasonably strong (if it actually goes through) rebuke to this whole essay:

So, who knows? Maybe my entire argument is correct only if we consider where we are today, circa May 20th, 2025. Perhaps things are on the fast track to shifting entirely, and you’d be foolish to ignore the opportunities that are arising amongst clinical-stage assets. Alternatively, perhaps building for the clinical world means placing bets on a future that takes far longer to arrive than you can stay solvent.

Addendum: can AI today predict a phase 3 failure?

Bit off topic, but it is fun to wonder: what would be the most impressive thing a sufficiently powerful model would be capable of? What could it do to convince an old-school pharmaceutical company that perhaps they really should be getting advice from o5-mini?

In the most ambitious case, maybe it looks like being able to predict a drug failure before it happens! Let’s do a quick check as to whether one of the current models could’ve predicted one of the most well-known and unexpected phase 3 drug failures, that of Torcetrapib (a cholesterol-modifying drug developed by Pfizer in the early 2000s), given only information from pre-phase 3 studies.

Torcetrapib was intended to elevate HDL (the “good cholesterol”), with hopes it would revolutionize cardiovascular disease treatment. Pfizer invested hundreds of millions of dollars, thousands of man-hours, and the hopes of millions of patients into this single drug candidate. Early clinical data showed exactly the cholesterol-boosting effects scientists had anticipated, prompting enormously optimistic forecasts from clinicians. In total, the drug cost roughly $800 million to develop.

Of course, we’ve already given away the ending of this story: Torcetrapib was a failure. During the infamous Phase 3 ILLUMINATE trial in 2006, investigators discovered, much to their horror, that Torcetrapib significantly increased the rate of cardiovascular events, and that the mortality rate of the Torcetrapib treatment arm was 60% higher than that of the control arm. The trial, which had enrolled over 15,000 patients, was abruptly terminated. Pfizer's market value plummeted overnight by billions of dollars, a stunning reversal for a company with a drug widely expected to become the industry's next blockbuster.

Were there early signs of its failure?

Well, early in Phase 1 and Phase 2 studies, a persistent side effect of the drug had already surfaced: mild elevations in systolic blood pressure among patients treated with Torcetrapib. Nothing dramatic, just an average increase of a few millimeters of mercury, small enough to dismiss initially as an anomaly or perhaps a tolerable side effect, especially given the remarkable cholesterol improvements. Then, as Phase 2 progressed, another understated biochemical abnormality appeared. Patients on Torcetrapib had small yet consistent perturbations in adrenal hormones, notably increased aldosterone and cortisol synthesis, which potentially suggested that Torcetrapib was inadvertently disrupting pathways unrelated to its intended mode of action. Later retrospective analyses of the 2006 ILLUMINATE trial blamed the dramatic increase in mortality among Torcetrapib patients on these side effects.

But could any of it have been predicted purely via literature that existed before the results of the trial came out? Using only papers from before the phase 3 trial start date of 2006, I gave the following prompt to FutureHouse’s recent co-scientist platform, which people online seem to generally say is better than Deep Research. Specifically, I gave it to the ‘Falcon’ module, which ‘produces a long report with many sources, good for literature reviews and evaluating hypotheses’:

Assemble together what you think of Torcetrapib, assessing whether it will succeed given in-vivo, in-vitro, and biochemical literature. Only use papers published from before 2006, assume you are a scientist before the results of the phase 3 ILLUMINATE trial. Do not assume the drug has already succeeded or failed.

Curiously, Falcon expressed some concern about whether the CETP inhibition of Torcetrapib (the primary mechanism by which it worked) had inadvertent off-target effects elsewhere. Exciting, because that is indeed why the drug ended up failing:

The intricate role of CETP in modulating not only HDL‑C but also LDL‑C and VLDL metabolism further complicates the prediction of torcetrapib’s net clinical benefit. Genetic studies have indicated that while CETP deficiency generally raises HDL‑C, the relationship with cardiovascular risk is not linear; in fact, some CETP mutations that lower HDL‑C are paradoxically associated with a lower risk of ischemic heart disease in certain populations (4.1). This paradox emphasizes that pharmacologically modulating CETP may have unintended consequences that depend on the balance between various lipoprotein fractions and the overall metabolic context. Thus, the potential success of torcetrapib hinges on its ability to fine‑tune this balance without triggering adverse alterations in lipoprotein functionality or promoting atherogenic particle profiles (5.1, 3.1).

Unfortunately, it did not mention the core off-target issue with the drug (raising adrenal hormones), only that off-target effects may exist. There are also some hints that the model was cheating at least somewhat, likely via reliance on some of its pre-training memory, since it also said this:

In addition, early terminations of phase 3 studies, although not fully detailed in the pre‑2006 literature, hint at potential safety issues that could offset the biochemical benefits observed in smaller trials.

Of course, this model lacked the likely plentiful private, internal data that Pfizer held on the drug, clinical and preclinical alike. Perhaps something even more interesting would pop out if models like Falcon were given access to such data!

I lied a little bit. Though most people generally agreed that clinical trial interpretation is usually very rote, one scientist (involved in clinical trial interpretations) disagreed with that characterization. They said that, in fact, the whole process is actually quite complicated, requires a fair bit of internal discussion, and tends to rely heavily on the literature. This is all to say: everything I’m saying here could very well be a very particular slice of the industry. Though patterns clearly emerge during clinical-stage work, and I’ve done my best to compile them, it would be easy to overfit. Take my slice with a grain of salt!

Haven’t finished the whole thing yet but I would read entire books written like detailed versions of section 2 lol

You provide pretty conclusive evidence that AI can't make better decisions than the current system, but isn't this also evidence that AI (perhaps with slightly-cynical prompting) can also do not-worse than the current system? And if this is the case, then AI might still be adopted eventually. A pretty substantial fraction of the expenses (both cash and equity) of a typical biotech will go towards the people who make such decisions. And at least some of the development time goes towards making such decisions. So adopting AI, even if not transformative, could still help reduce costs. (This does mean that making such an AI isn't a viable startup, since one can't charge more than the expected cost savings.) I guess the counterpoint is that having smart, respected, and experienced people making these decisions is necessary to gain confidence from the investors and the company staff. But the counter-counterpoint is that, depending on how public perception of AI evolves, people might eventually trust AI even more for these decisions.